Text Classification in Just 20 Lines of Code

Training a state-of-the-art text classifier with PyTorch and fastai, with minimal setup!

The Setup

After downloading the data, import the fastai text modules as well as pandas to read the CSV file. I’ll only be using the training data at this point; it contains enough tweets for both the training and validation splits to be adequately distributed.

    from fastai.text.all import *
    import pandas as pd

    path = Path('/storage/Corona_NLP_train.csv')
    df = pd.read_csv(path, usecols=['OriginalTweet', 'Sentiment'], encoding='latin1')
    df.head()

The output is the simple text data containing the raw tweet and a sentiment column with five classes: Extremely Positive, Positive, Negative, Extremely Negative, and Neutral.

Making a language model for predicting the next word in the text

Next, we build a language model from this dataset. We start from a pre-trained model that fastai provides and fine-tune it on the tweets; its encoder is what we later reuse to do the classification.

But first, we load the data into a set of data loaders:

    dls_lm = TextDataLoaders.from_df(df, text_col='OriginalTweet', label_col='Sentiment', valid_pct=0.20, bs=64, is_lm=True)

Note: We’re keeping the validation split as 20% of the whole train CSV file.
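To make the 20% validation split concrete, here is a minimal sketch of a random split in plain Python. It only illustrates the idea behind `valid_pct`; it is not fastai's own code. The `random_split` helper, the seed, and the toy labels are all hypothetical stand-ins for the real Sentiment column.

```python
import random
from collections import Counter

def random_split(n_items, valid_pct=0.2, seed=42):
    """Shuffle the indices 0..n_items-1 and cut off valid_pct of them for validation."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    cut = int(valid_pct * n_items)
    return idx[cut:], idx[:cut]  # (train indices, validation indices)

# Hypothetical toy labels standing in for the Sentiment column
labels = ["Positive", "Negative", "Neutral",
          "Extremely Positive", "Extremely Negative"] * 20

train_idx, valid_idx = random_split(len(labels), valid_pct=0.2)

# Count how often each class lands in each split
train_counts = Counter(labels[i] for i in train_idx)
valid_counts = Counter(labels[i] for i in valid_idx)

print(len(train_idx), len(valid_idx))
print(train_counts)
print(valid_counts)
```

Comparing the two counters is a quick way to check that every class shows up in both splits.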

If we look at what the inputs (X) and targets (y) of this data loader consist of, we have this:

    dls_lm.show_batch(max_n=1)

This is essentially the first part of building our classification pipeline. In this step, we make sure our model knows how to predict the next word (or subword) in a given line of text, so that later we can use it to train a classifier that predicts the sentiment of the text.
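The "predict the next word" setup means the target is just the input shifted by one token. Here is a minimal sketch of that idea in plain Python. The `lm_pairs` helper and the example tweet are made up for illustration; `xxbos` is the beginning-of-text token that fastai's tokenizer adds.

```python
def lm_pairs(tokens, seq_len):
    """Cut a token stream into (input, target) windows; each target is the input shifted by one."""
    pairs = []
    for start in range(0, len(tokens) - seq_len, seq_len):
        x = tokens[start:start + seq_len]
        y = tokens[start + 1:start + seq_len + 1]
        pairs.append((x, y))
    return pairs

# A made-up tweet, already tokenized
tokens = "xxbos stay home and stay safe everyone".split()

for x, y in lm_pairs(tokens, seq_len=3):
    print(x, "->", y)
```

Each word in `y` is the word that follows the word at the same position in `x`, which is exactly what the language model is trained to guess.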

Defining the language model is simply:

    learn = language_model_learner(dls_lm, AWD_LSTM, drop_mult=0.3)

And then we train it:

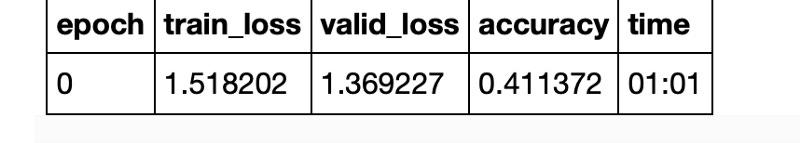

    learn.fit_one_cycle(10, 1e-2)

Finally, save your model’s encoder (the whole model except the final output layer):

    learn.save_encoder('finetuned')

That’s it! Now we can go ahead and use this encoder to train a model that performs classification for us!
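For the curious, here is a rough sketch of the learning rate schedule behind the `fit_one_cycle` call above: the rate warms up to its maximum along a cosine curve and then anneals down to a very small value. The helper names are hypothetical, and the defaults (`div=25`, `div_final=1e5`, `pct_start=0.25`) are what I understand fastai's to be, so treat them as assumptions rather than a copy of the library's code.

```python
import math

def cos_anneal(start, end, pos):
    """Cosine interpolation from start (pos=0) to end (pos=1)."""
    return start + (1 + math.cos(math.pi * (1 - pos))) * (end - start) / 2

def one_cycle_lr(pos, lr_max, div=25.0, div_final=1e5, pct_start=0.25):
    """Learning rate at training progress pos, where pos runs from 0 to 1."""
    if pos < pct_start:
        # Warm-up phase: lr_max / div up to lr_max
        return cos_anneal(lr_max / div, lr_max, pos / pct_start)
    # Annealing phase: lr_max down to lr_max / div_final
    return cos_anneal(lr_max, lr_max / div_final, (pos - pct_start) / (1 - pct_start))

# Sample the schedule for the lr_max of 1e-2 used above
for pos in (0.0, 0.1, 0.25, 0.5, 0.75, 1.0):
    print(f"{pos:.2f} {one_cycle_lr(pos, lr_max=1e-2):.6f}")
```

Under these assumptions, the value we pass to `fit_one_cycle` is the peak of the schedule, not a constant rate used throughout.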

Training a classifier

Define another data loader first:

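    dls_clas = TextDataLoaders.from_df(df, valid_pct=0.2, text_col='OriginalTweet', label_col='Sentiment', bs=64, text_vocab=dls_lm.vocab)

Passing text_vocab = dls_lm.vocab makes the classifier use the same token-to-index mapping as the fine-tuned encoder. Now, we just start training!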

    learn = text_classifier_learner(dls_clas, AWD_LSTM, drop_mult=0.5, metrics=accuracy).to_fp16()

    # load our saved encoder
    learn = learn.load_encoder('finetuned')

I want to train with an appropriate learning rate, so I plot the loss vs. learning rate curve first:

    learn.lr_find()

We get a curve like this:

From this curve, a learning rate of 2e-3 looks like a good place to start our training.
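If you are wondering where such a curve comes from: a learning rate finder trains for a few mini-batches while raising the learning rate exponentially and recording the loss at each step. Below is a minimal sketch of that idea. The function names are hypothetical, the default range (1e-7 to 10 over 100 steps) is what I believe `lr_find` uses, and the toy losses are invented purely to exercise the code.

```python
import math

def lr_schedule(start_lr=1e-7, end_lr=10.0, num_it=100):
    """Exponentially spaced learning rates, one per mini-batch."""
    ratio = end_lr / start_lr
    return [start_lr * ratio ** (i / (num_it - 1)) for i in range(num_it)]

def steepest_lr(lrs, losses):
    """Return the learning rate at which the loss falls fastest per unit of log10(lr)."""
    best_i, best_slope = None, 0.0
    for i in range(1, len(lrs)):
        slope = (losses[i] - losses[i - 1]) / (math.log10(lrs[i]) - math.log10(lrs[i - 1]))
        if slope < best_slope:
            best_i, best_slope = i, slope
    return lrs[best_i] if best_i is not None else None

# Invented losses for a five-step sweep: slow drop, fast drop, plateau, divergence
toy_lrs = lr_schedule(num_it=5)
toy_losses = [1.6, 1.5, 1.0, 0.9, 3.0]

print(toy_lrs)
print(steepest_lr(toy_lrs, toy_losses))
```

Picking a rate from the region where the loss is still falling steeply, well before it blows up, is the usual rule of thumb.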

Now we train with gradual unfreezing: first only the last layer group, then the last two, then a few more, and finally the whole model.

Fit one epoch with the model as the learner gives it to us, frozen except for its last layer group:

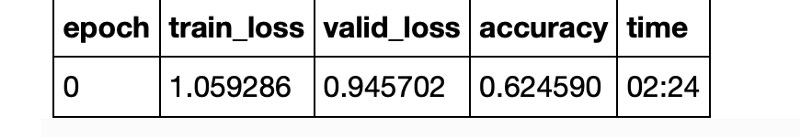

    learn.fit_one_cycle(1, 2e-3)

Then unfreeze the last two layer groups:

    learn.freeze_to(-2)
    learn.fit_one_cycle(1, 3e-3)

Then the last four:

    learn.freeze_to(-4)
    learn.fit_one_cycle(1, 5e-3)

And finally, the whole model:

    learn.unfreeze()
    learn.fit_one_cycle(5, 1e-2)

And that is the end result we have!
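The `freeze_to` calls above can be read as "freeze every layer group before this index and train the rest". Here is a small sketch of that bookkeeping in plain Python. The `freeze_to` function below is my own stand-in, not fastai's method, and the five group names are hypothetical labels for the layer groups of the classifier.

```python
def freeze_to(groups, n):
    """Map each group name to True if it is trainable after freezing all groups before index n."""
    cut = n if n >= 0 else len(groups) + n
    return {name: i >= cut for i, name in enumerate(groups)}

# Hypothetical names for the layer groups, from input side to output side
groups = ["embedding", "rnn_1", "rnn_2", "rnn_3", "head"]

# The four training stages used above, expressed as freeze_to indices
stages = [
    ("frozen learner", -1),  # only the last group trains
    ("freeze_to(-2)", -2),
    ("freeze_to(-4)", -4),
    ("unfreeze()", 0),       # everything trains
]

for label, n in stages:
    trainable = [name for name, on in freeze_to(groups, n).items() if on]
    print(label, trainable)
```

Each stage lets the optimizer touch a little more of the pre-trained model, so the early layers are disturbed only after the later ones have settled.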

Try it yourself!

    learn.predict('This was a really bad day in my life. My whole family except my dad was infected.')

Output:

    ('Extremely Negative', tensor(0), tensor([9.7521e-01, 1.8054e-02, 5.1762e-05, 5.3735e-03, 1.3143e-03]))

And voila! We have a text classifier with pretty decent accuracy! From here, we just need to research and experiment a bit more to build an even better model!
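The output tuple holds the predicted label, its index, and one probability per class. The sketch below pairs those probabilities with class names in plain Python. It assumes the classes are stored in alphabetical order, which is consistent with index 0 being 'Extremely Negative' in the output above; the real order comes from the category vocabulary that fastai builds.

```python
# Assumed class order: alphabetical (index 0 is then 'Extremely Negative')
classes = sorted(["Extremely Positive", "Positive", "Negative",
                  "Extremely Negative", "Neutral"])

# Probabilities copied from the prediction output above
probs = [9.7521e-01, 1.8054e-02, 5.1762e-05, 5.3735e-03, 1.3143e-03]

# Index of the largest probability, i.e. the predicted class
best = max(range(len(probs)), key=probs.__getitem__)
print(classes[best], probs[best])

# All classes, most likely first
for name, p in sorted(zip(classes, probs), key=lambda pair: -pair[1]):
    print(f"{name:20s}{p:.4f}")
```

Looking at the full ranking, not just the top label, shows how confident the model is and which class it considers the runner-up.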

The entire code for this little project is available at: https://github.com/yashprakash13/RockPaperScissorsFastAI


Happy learning! 😁